Game Engine: Bone Hierarchy

Bone hierarchy was implemented!

In real life, bones are attached to each other. I have set up an attachment system in which the top bones are children of the bottom bones. Moving a bottom bone therefore moves the top bone, but not the other way around, since the bottom bone is not a child of the top.

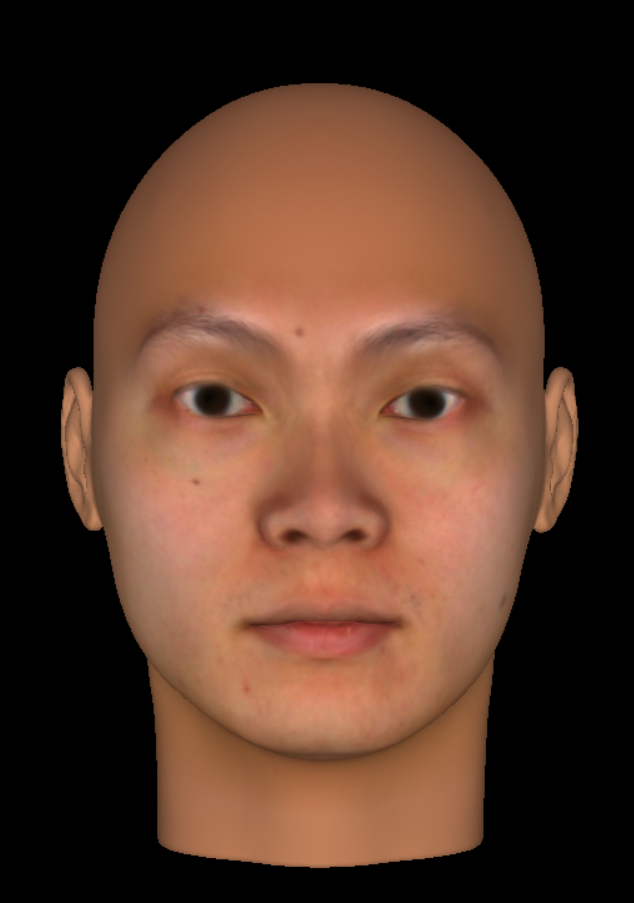
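The parent/child rule above can be sketched in a few lines. This is a minimal illustration, not the engine's actual code: the `Vec3` and `Bone` types and the `worldPosition` function are hypothetical names, and rotation is left out so only positions are inherited.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical types for illustration only.
struct Vec3 {
    float x, y, z;
};

struct Bone {
    int parent;     // index of the parent bone, or -1 for a root bone
    Vec3 localPos;  // position relative to the parent (or the world, for a root)
};

// Walks up the parent chain and sums the local offsets.
// Assumes the hierarchy has no cycles; translation only, no rotation.
Vec3 worldPosition(const std::vector<Bone>& bones, int index) {
    Vec3 result{0.0f, 0.0f, 0.0f};
    while (index != -1) {
        assert(index >= 0 && static_cast<std::size_t>(index) < bones.size());
        const Bone& b = bones[static_cast<std::size_t>(index)];
        result.x += b.localPos.x;
        result.y += b.localPos.y;
        result.z += b.localPos.z;
        index = b.parent;
    }
    return result;
}
```

With a bottom bone at index 0 and a top bone at index 1 whose `parent` is 0, changing `bones[0].localPos` changes what `worldPosition(bones, 1)` returns, while changing `bones[1].localPos` leaves `worldPosition(bones, 0)` untouched.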

In-House Game Engine: Simple Bone System

I've created my first simple bone system. Right now it's a proof of concept. After OOP-ifying the vertex data of a model, I've set it up so that each mesh vertex has extra bone information, such as which bones "own" the vertex (are parents to it) and the strength of each bone's influence. (A mesh consists of floating, infinitesimally small points, which the renderer later fills into a solid shape, i.e. the head you see.)

A bone is a single point with position and rotation data. It's basically another vertex, in the math sense of things.

A bone is the parent of many mesh vertices of the model, so when you move a bone, those vertices follow it. This lets the developer (me) move a whole group of vertices in a coherent and very easy way once a bone system for a model is set up and loaded in.
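Here is a rough sketch of per-vertex bone information, assuming each influence is stored as a bone index plus a weight. `BoneInfluence`, `SkinnedVertex`, and `skinVertex` are made-up names for illustration, and only bone translation is handled (no rotation).

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Vec3 {
    float x, y, z;
};

// Hypothetical layout: one entry per bone that "owns" the vertex.
struct BoneInfluence {
    std::size_t boneIndex;  // which bone is a parent of this vertex
    float weight;           // strength of that bone's influence (0 to 1)
};

struct SkinnedVertex {
    Vec3 restPos;                           // position before any bone moves
    std::vector<BoneInfluence> influences;  // the extra bone information
};

// boneOffsets[i] is how far bone i has moved from its rest position.
// Each owning bone drags the vertex along in proportion to its weight.
Vec3 skinVertex(const SkinnedVertex& v, const std::vector<Vec3>& boneOffsets) {
    Vec3 out = v.restPos;
    for (const BoneInfluence& inf : v.influences) {
        assert(inf.boneIndex < boneOffsets.size());
        const Vec3& d = boneOffsets[inf.boneIndex];
        out.x += inf.weight * d.x;
        out.y += inf.weight * d.y;
        out.z += inf.weight * d.z;
    }
    return out;
}
```

A vertex fully owned by one bone (weight 1) moves exactly as far as the bone does. A vertex with weight 0.5 moves half as far, which is what keeps joints from tearing when two bones share vertices.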

In a more complex system, a bone can be parented to other bones. For example, your hand bone (at the wrist, controlling hand movement) is a child of your lower-arm bone (at the elbow, controlling the movement of your forearm). This means that moving the lower-arm bone also moves the hand.

^^ In the 3D model sense, this would mean the lower-arm bone moves all the mesh vertices directly parented to it, AND it also moves the hand bone, WHICH IN TURN moves all the vertices parented to the hand bone.
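The chain "lower-arm moves hand bone, hand bone moves its vertices" can be sketched in 2D with rotation included. This is an illustrative sketch under assumptions of my own: `Bone2D`, `WorldTransform`, `computeWorld`, and `vertexWorld` are hypothetical names, and parents are assumed to be stored before their children.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Vec2 {
    float x, y;
};

// Hypothetical 2D bone: an offset and rotation relative to its parent.
struct Bone2D {
    int parent;        // index of the parent bone, -1 for the root
    Vec2 localPos;     // offset from the parent, in the parent's space
    float localAngle;  // rotation relative to the parent, in radians
};

struct WorldTransform {
    Vec2 pos;
    float angle;
};

Vec2 rotate(Vec2 v, float a) {
    const float c = std::cos(a);
    const float s = std::sin(a);
    return Vec2{v.x * c - v.y * s, v.x * s + v.y * c};
}

// Assumes every parent is stored before its children in the vector.
std::vector<WorldTransform> computeWorld(const std::vector<Bone2D>& bones) {
    std::vector<WorldTransform> world(bones.size());
    for (std::size_t i = 0; i < bones.size(); ++i) {
        const Bone2D& b = bones[i];
        if (b.parent < 0) {
            world[i] = WorldTransform{b.localPos, b.localAngle};
            continue;
        }
        const std::size_t p = static_cast<std::size_t>(b.parent);
        assert(p < i);
        // The child's offset is rotated by the parent's accumulated rotation.
        const Vec2 offset = rotate(b.localPos, world[p].angle);
        world[i] = WorldTransform{
            Vec2{world[p].pos.x + offset.x, world[p].pos.y + offset.y},
            world[p].angle + b.localAngle};
    }
    return world;
}

// A vertex stored relative to its bone follows that bone's world transform.
Vec2 vertexWorld(const WorldTransform& bone, Vec2 localVertex) {
    const Vec2 r = rotate(localVertex, bone.angle);
    return Vec2{bone.pos.x + r.x, bone.pos.y + r.y};
}
```

If the lower-arm bone (index 0) is rotated by a quarter turn, a hand bone sitting one unit along the arm ends up at roughly (0, 1), and a hand vertex stored at (0.5, 0) relative to the hand bone ends up at roughly (0, 1.5), even though neither the hand bone nor the vertex was edited directly.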

This is how characters have moved in games since the late 90s (HL1 being one of the first to introduce skeletal systems in computer games).

For ragdoll physics, the physics simulation is in charge of the position and rotation of the bones (which, again, in turn move all the mesh vertices parented to them), thus giving you the experience of a ragdoll.
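As a very rough sketch of "the physics simulation moves the bones": each step below applies gravity to every bone and writes the new position back, clamping at a floor height. A real ragdoll would use rigid bodies and joint constraints. `RagdollBone` and `stepRagdoll` are hypothetical names, and rotation is omitted.

```cpp
#include <cassert>
#include <vector>

struct Vec3 {
    float x, y, z;
};

// Hypothetical bone state owned by the physics step instead of an animation.
struct RagdollBone {
    Vec3 position;
    Vec3 velocity;
};

// One semi-implicit Euler step: update velocity from gravity first,
// then move each bone by its new velocity.
void stepRagdoll(std::vector<RagdollBone>& bones, float dt, float floorY) {
    assert(dt > 0.0f);
    const float gravity = -9.81f;  // along the y axis, in units per second squared
    for (RagdollBone& b : bones) {
        b.velocity.y += gravity * dt;
        b.position.x += b.velocity.x * dt;
        b.position.y += b.velocity.y * dt;
        b.position.z += b.velocity.z * dt;
        if (b.position.y < floorY) {  // crude floor collision
            b.position.y = floorY;
            b.velocity.y = 0.0f;
        }
    }
}
```

After each step, the bone positions would be fed into the same skinning code that animation uses, so the mesh vertices follow the falling bones.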

Software architecture blurs into Game-World - Part 2

I'm working on my HL1-Postal2-Oblivion-inspired in-house game engine, and my main focus is human characters/NPCs.

I further realize that complex objects have many parts existing in a relationship with each other.

This reminds me of the ancient Egyptian concept of a human soul containing many parts (8 or 9: Ba, Ka, Ren, etc.). Likewise, my NPC objects consist of many parts.

As I wrote in my post below this one, I think it's really fascinating when the architecture of a piece of software has a stronger coherence with the actual playable game world: a design where the software layer and the game-world layer more tightly resemble each other.

Since the NPC object really is made up of many parts, the player should be able to 'dismantle' NPCs and examine their parts in a fun, gamey way while playing.

I'll probably achieve this with the use of some cool GUIs, visuals, and some gore effects, keeping in touch with Unknown Era's creepy surreal/alternate-reality vibe.

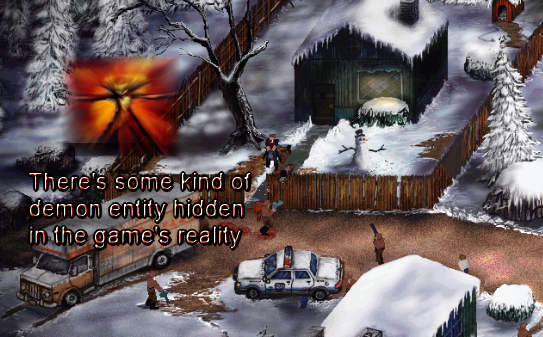

Postal 1: Software architecture blurs into Game-World

In Postal 1 there's an allusion that the main character (MC) is demon-possessed. It's ambiguous whether Rick Hunter's voice lines are the MC's voice or the voice of the demon.

Rick Hunter's voice sound files are labelled as 'demon', and the MC's hurt and pain sounds are not in Rick Hunter's voice.

Additionally, and most interestingly, there's an entity/object that is created when the game world initializes: the demon entity. It can be removed/destroyed by modifying the game program, and removing it means Rick Hunter's voice no longer plays.

Yet the MC will still have hurt and pain sounds.

In layman's terms, a demon would be considered an entity outside of a person, with an independent existence. And we can see that here in the game's own software architecture: the demon is a separate and distinct entity/binary object from the player, not part of the player object.

I wonder if it's local to the game world, having a spatial position inside it, or if it's in some kind of primitive non-local space, adjacent to the spatial game world your player plays in...

Hardware Register Pointer

When working with special hardware systems, the vendor can provide the specific physical memory locations of special registers. In C++ you can read and modify these registers directly through a volatile pointer.

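------------------

#include <cstdint>
#include <iostream>

// Placeholder address: the real one was missing from this listing; use the vendor's value.
volatile std::uint32_t* hardware_register = reinterpret_cast<volatile std::uint32_t*>(0x40000000);

int main() {
    std::uint32_t value = *hardware_register;  // read the register
    std::cout << "Register value: " << value << std::endl;
    *hardware_register = 0x12345678;           // write the register
}

------------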

OpenGL Pipeline

OpenGL is a free, cross-platform 3D rendering API that has been around since the early 90s.

You start with a C++ OpenGL project and begin the pipeline by creating an OpenGL context (environment). Then you compile your shader code (i.e. the vertex and fragment shaders), link it into a shader program, and make that program the active one that your main program's draw calls will use.

Binary Object Architecture

Binary objects need a specific layout in order to be read effectively by the operating system's loader and executed by the processor.

Windows follows the PE (Portable Executable) format, and names its directly runnable binary objects .exe. (ELF, the Executable and Linkable Format, is the equivalent format on Linux and other Unix-like systems.)

There is another kind of binary object on Windows, known as a .dll (dynamic-link library), that is meant to be loaded by the .exe at runtime.

DLLs can reduce the memory footprint of a program by loading binaries only when specific events need them. Without DLLs, we would need to load in ALL the dependencies of a program on startup, instead of piece by piece as necessary.

The PE format that Windows uses, like ELF on Linux, divides a binary object into several subsections that provide its infrastructure (a header, plus sections used for instructions, data, and indexing).
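To make the "header" idea concrete, here is a small sketch that identifies a binary's format from its first bytes. It relies on two documented facts: an ELF file begins with the bytes 0x7F 'E' 'L' 'F', and a PE file begins with 'M' 'Z' and stores, at offset 0x3C, a little-endian offset to the signature 'P' 'E' 0 0. The `BinaryFormat` enum and `detectFormat` function are hypothetical names for illustration.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

enum class BinaryFormat { Unknown, Elf, Pe };

// Inspects only the magic numbers; it does not validate the rest of the file.
BinaryFormat detectFormat(const std::vector<std::uint8_t>& data) {
    // ELF: 4-byte magic at the very start of the file.
    if (data.size() >= 4 && data[0] == 0x7F && data[1] == 'E' &&
        data[2] == 'L' && data[3] == 'F') {
        return BinaryFormat::Elf;
    }
    // PE: DOS header starts with "MZ"; offset 0x3C points to "PE\0\0".
    if (data.size() >= 0x40 && data[0] == 'M' && data[1] == 'Z') {
        const std::size_t peOffset =
            static_cast<std::size_t>(data[0x3C]) |
            (static_cast<std::size_t>(data[0x3D]) << 8) |
            (static_cast<std::size_t>(data[0x3E]) << 16) |
            (static_cast<std::size_t>(data[0x3F]) << 24);
        if (peOffset <= data.size() - 4 && data[peOffset] == 'P' &&
            data[peOffset + 1] == 'E' && data[peOffset + 2] == 0 &&
            data[peOffset + 3] == 0) {
            return BinaryFormat::Pe;
        }
    }
    return BinaryFormat::Unknown;
}
```

Reading an .exe or .dll into a byte vector and passing it to `detectFormat` should give `BinaryFormat::Pe`, since both file types share the same PE layout.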